TensorFlow vs PyTorch vs Keras: A Beginner-Friendly Comparison of Deep Learning Frameworks

Whether you’re just stepping into the world of deep learning or already exploring complex neural networks, choosing the right framework is crucial. Among the many, three stand out: TensorFlow, PyTorch, and Keras. These tools power research breakthroughs, industrial AI products, and educational curriculums alike.

In this guide, we’ll explore:

- What each framework is and who it’s for

- Their strengths and differences

- Simple example code for each (so you can try them yourself!)

- Guidance on how to choose the right one

Let’s dive in.

What Is a Deep Learning Framework?

A deep learning framework provides tools and abstractions to define, train, and deploy neural networks. Instead of manually implementing backpropagation or matrix multiplications, you define your architecture and the framework takes care of:

- Tensor operations

- GPU acceleration

- Automatic differentiation

- Optimization

- Data loading and batching
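To see what that list saves you from, here is a minimal sketch of training a one-weight linear model by hand in plain Python. The toy data, the learning rate, and the function name train_by_hand are illustrative assumptions, not part of any library. The two gradient lines are the part a framework derives for you through automatic differentiation, and the list arithmetic is what it would run as tensor operations.

```python
def train_by_hand(xs, ys, lr=0.05, epochs=500):
    """Fit y ~ w * x + b using hand-derived gradients of the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: prediction error for every sample
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Backward pass, derived manually for L = mean(error^2)
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # Optimizer step: plain gradient descent
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical toy data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]

w, b = train_by_hand(xs, ys)
print(f"w={w:.3f}, b={b:.3f}")
```

Every change to the model (another layer, a different loss) would mean re-deriving those gradients by hand, which is exactly the work the frameworks below take over.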

1. PyTorch — The Researcher’s Choice

- Developer: Meta (Facebook AI Research)

- Released: 2016

- Philosophy: “Define-by-run” (dynamic graphs)

PyTorch is known for its clean, Pythonic syntax and dynamic computation graph. This makes debugging and model development feel intuitive — like writing regular Python code.

Key Features

- Dynamic computation graph

- Native support for Python control flow

- Easy to debug and experiment

- Preferred by the research community (e.g., Hugging Face models, OpenAI)
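A small sketch of what "dynamic graph" and "Python control flow" mean in practice: the loop below runs a data-dependent number of times, and autograd differentiates through whichever operations actually executed. The function name repeated_double and the threshold value are hypothetical, chosen only for illustration.

```python
import torch

def repeated_double(x, threshold=10.0):
    """Double x until it reaches the threshold; the loop count depends on the data."""
    steps = 0
    while x.item() < threshold:  # ordinary Python control flow on a tensor value
        x = x * 2
        steps += 1
    return x, steps

x = torch.tensor(1.5, requires_grad=True)
y, steps = repeated_double(x)

# The graph was built while the loop ran, so backward() follows those exact steps
y.backward()

print("steps:", steps)
print("dy/dx:", x.grad.item())
```

With the starting value 1.5 the loop runs three times, so y equals 8 * x and the gradient is 8. A different input would produce a different number of steps and a different graph, with no changes to the code.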

Sample Code: Build a Simple Neural Network with PyTorch

```python
import torch
import torch.nn as nn
import torch.optim as optim

# Dummy dataset: 100 samples, 3 features, 1 target
x = torch.randn(100, 3)
y = torch.randn(100, 1)

# Define model: a single linear layer
class SimpleNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(3, 1)

    def forward(self, x):
        return self.linear(x)

model = SimpleNet()
loss_fn = nn.MSELoss()
optimizer = optim.Adam(model.parameters(), lr=0.01)

# Training loop
for epoch in range(100):
    y_pred = model(x)          # forward pass
    loss = loss_fn(y_pred, y)  # compute loss
    optimizer.zero_grad()      # clear gradients from the previous step
    loss.backward()            # backpropagate
    optimizer.step()           # update weights

print("Final loss:", loss.item())
```

PyTorch has limited native TPU support. TPUs are reached through the separate PyTorch/XLA package (torch-xla), which can be used, for example, in Google Colab's experimental TPU runtime. Beyond that, production-scale TPU training tends to be more cumbersome than in TensorFlow.

2. TensorFlow — Built for Production

- Developer: Google Brain

- Released: 2015

- Philosophy: Scalable computation for deployment

TensorFlow was initially known for its static computation graph (define-then-run). TensorFlow 2.x made eager execution the default and adopted tf.keras as its high-level API, so everyday code now feels much closer to PyTorch.
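The difference between the two execution styles can be sketched in a few lines. This is a minimal illustration, assuming TensorFlow 2.x; the function name square_plus_one is made up for the example. The same Python function is first run eagerly, then traced into a graph with tf.function, and finally differentiated with tf.GradientTape.

```python
import tensorflow as tf

def square_plus_one(x):
    return x * x + 1.0

x = tf.constant(3.0)

# Eager execution: the operation runs immediately and returns a concrete value
eager_result = square_plus_one(x)

# Graph execution: tf.function traces the same Python function into a graph
graph_fn = tf.function(square_plus_one)
graph_result = graph_fn(x)

# Gradients are recorded with tf.GradientTape
with tf.GradientTape() as tape:
    tape.watch(x)  # constants are not tracked automatically
    y = square_plus_one(x)
grad = tape.gradient(y, x)

print(float(eager_result), float(graph_result), float(grad))
```

Both calls return 10.0 and the gradient is 6.0. The eager version is easier to step through in a debugger, while the traced version is the form TensorFlow can optimize and export.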

Key Features

- Wide deployment tools (TF Lite, TF Serving, TF.js)

- Optimized for large-scale training and mobile

- Runs on CPUs, GPUs, TPUs

- Strong visualization with TensorBoard

Sample Code: Simple Neural Network with TensorFlow

```python
import tensorflow as tf

# Dummy data: 100 samples, 3 features, 1 target
x = tf.random.normal((100, 3))
y = tf.random.normal((100, 1))

# Define model: one dense layer on a 3-feature input
model = tf.keras.Sequential([
    tf.keras.Input(shape=(3,)),
    tf.keras.layers.Dense(1)
])

model.compile(optimizer='adam', loss='mse')

# Train model
model.fit(x, y, epochs=100, verbose=0)

# evaluate() returns the loss; verbose=0 suppresses the progress bar
print("Final loss:", model.evaluate(x, y, verbose=0))
```

TensorFlow offers native TPU support via tf.distribute.TPUStrategy. It's deeply integrated with Google Cloud and optimized for performance on TPU hardware, which makes it a natural fit for teams building production-scale training systems on Google infrastructure.

3. Keras — Simplicity First

- Developer: François Chollet (the library now ships with TensorFlow)

- Released: 2015

- Philosophy: Human-centered design

Keras started as a high-level API that ran on top of backends such as Theano, TensorFlow, and CNTK. It now ships with TensorFlow as tf.keras, and since Keras 3 it can also run on JAX and PyTorch. It's known for its clean, minimal syntax and fast experimentation.

Key Features

- Simple, readable code

- Excellent for teaching and prototyping

- Integrated with TensorFlow for deployment

Sample Code: Same Network Using Keras (via tf.keras)

from tensorflow import keras

from tensorflow.keras import layers

# Dummy data

x = tf.random.normal((100, 3))

y = tf.random.normal((100, 1))

# Model

model = keras.Sequential([

layers.Dense(1, input_shape=(3,))

])

model.compile(optimizer='adam', loss='mse')

model.fit(x, y, epochs=100, verbose=0)

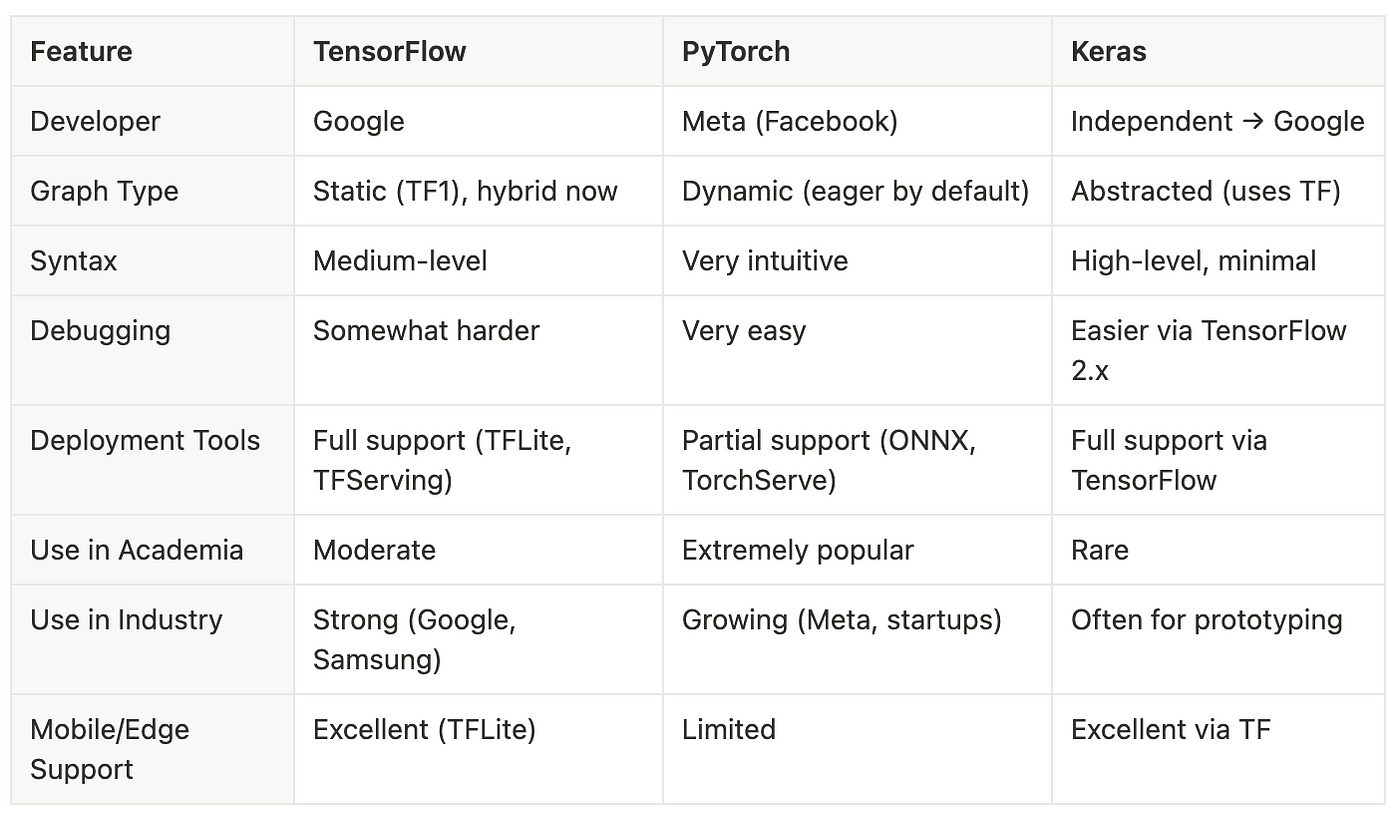

print("Final loss:", model.evaluate(x, y))Comparison Table

So… Which One Should You Use?

- You’re a beginner learning deep learning: Keras

- You want to experiment with custom models: PyTorch

- You need to deploy models to production: TensorFlow

- You’re working on academic NLP/LLM research: PyTorch

- You’re building a mobile AI application: TensorFlow + TFLite

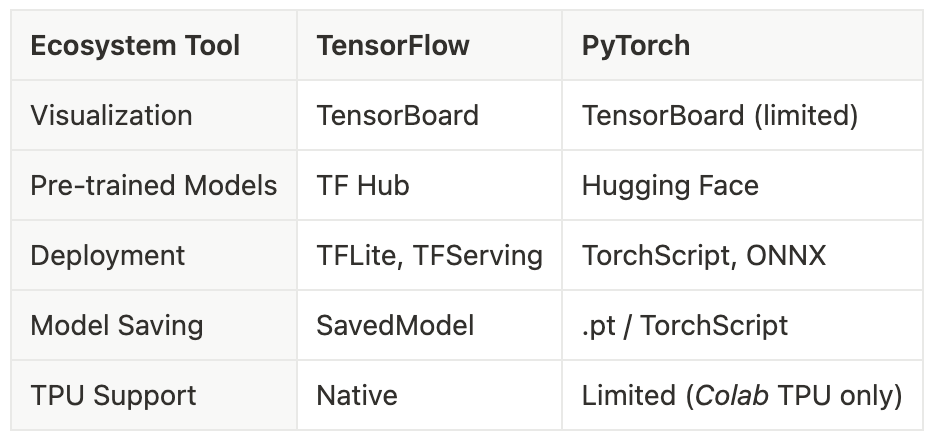

Ecosystem Comparison

ONNX in the Real World

ONNX (Open Neural Network Exchange) is an open standard for model interchange. It lets a model trained in PyTorch be exported to a framework-neutral file and run in production by an ONNX-compatible runtime, often on a different platform from the one it was trained on.

Sample Code: Export a Simple Neural Network to ONNX

```python
import torch
import torch.nn as nn
import torch.optim as optim
import torch.onnx

# Same dummy data and model as in the PyTorch example
x = torch.randn(100, 3)
y = torch.randn(100, 1)

class SimpleNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(3, 1)

    def forward(self, x):
        return self.linear(x)

model = SimpleNet()
loss_fn = nn.MSELoss()
optimizer = optim.Adam(model.parameters(), lr=0.01)

for epoch in range(100):
    y_pred = model(x)
    loss = loss_fn(y_pred, y)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

# Export the trained model to ONNX
model.eval()  # switch to inference mode before exporting
example_input = torch.randn(1, 3)  # defines the input shape for tracing

torch.onnx.export(model, example_input, "simple_net.onnx",
                  input_names=['input'], output_names=['output'],
                  # mark the first dimension as variable so any batch size works
                  dynamic_axes={'input': {0: 'batch_size'}, 'output': {0: 'batch_size'}})

print("Model exported to ONNX.")
```

Why ONNX Matters for Enterprises:

- Hardware agnostic: Run PyTorch models on NVIDIA, Intel, or ARM hardware

- Production deployment: Export PyTorch model → ONNX → TensorRT or OpenVINO

- Cross-framework compatibility: A model trained in PyTorch can be served from C++ or Java applications through an ONNX runtime

Major companies like Microsoft, Amazon, and NVIDIA support ONNX in their AI pipelines. This makes it a practical bridge between research in PyTorch and production in more optimized environments.

Final Thoughts

- Use PyTorch if you value flexibility, experimentation, and you want to read/reproduce cutting-edge research.

- Use TensorFlow if your focus is on deployment, scalability, or working in a production-grade pipeline.

- Use Keras if you’re new and want to learn deep learning concepts without getting stuck in boilerplate.

The good news? You don’t have to commit forever. Some teams prototype in PyTorch and deploy through ONNX or TensorFlow tooling, and others move between frameworks as their needs evolve.

Deep learning is about ideas — frameworks are just tools to bring them to life.