Paper Review - LongDocURL (ACL 2025)

Introduction

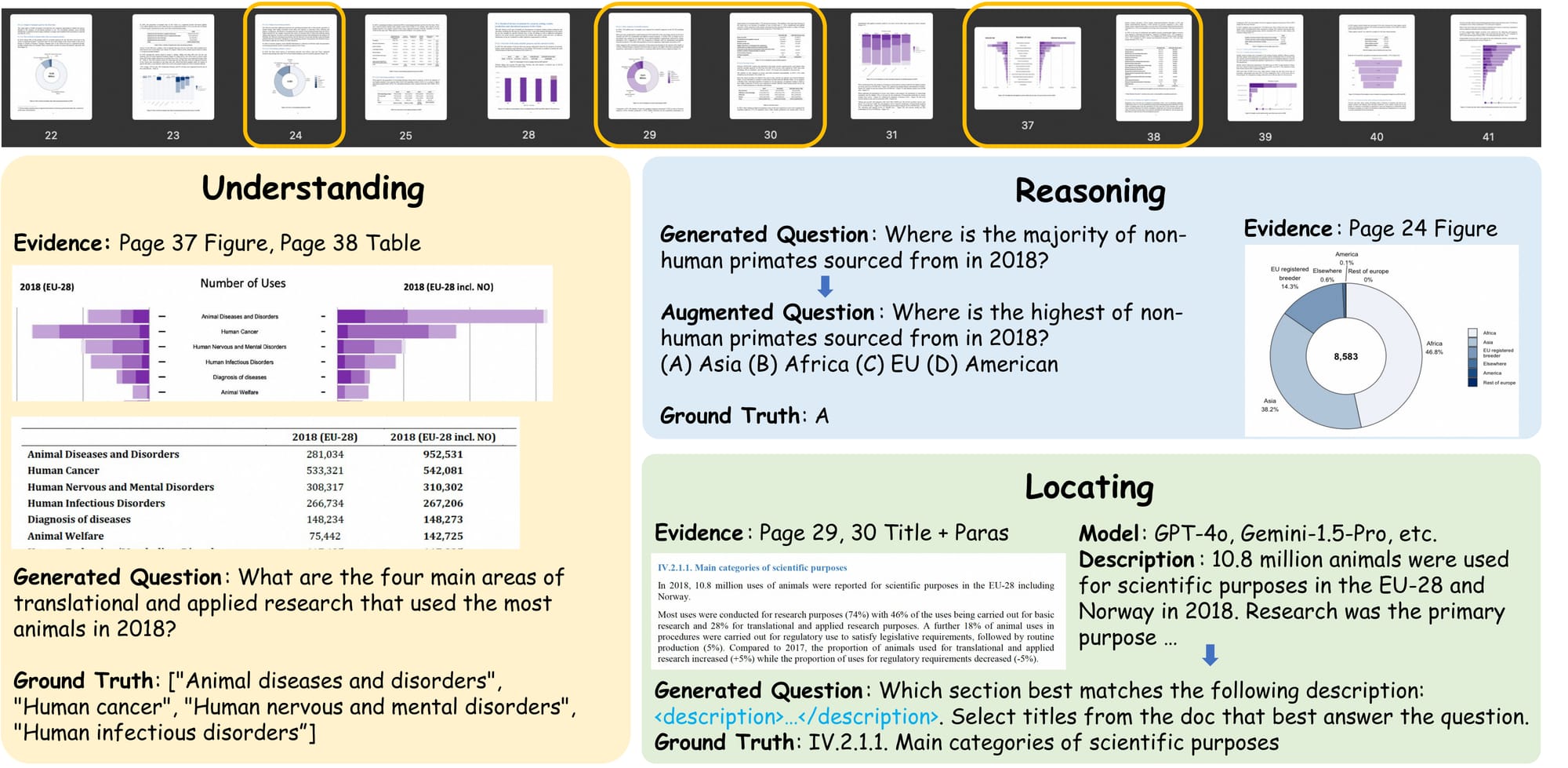

Published at ACL 2025, "LongDocURL: a Comprehensive Multimodal Long Document Benchmark Integrating Understanding, Reasoning, and Locating" addresses important limitations in document understanding evaluation. This paper exposes fundamental gaps in how we test AI models on complex documents.

The research, conducted by a collaborative team from Chinese Academy of Sciences and Alibaba Group, addresses a gap in the field: while large vision-language models (LVLMs) have made progress in document understanding, existing benchmarks have been inadequate for evaluating their capabilities on real-world, long-form documents.

The Problem: Why Current Benchmarks Fall Short

Picture this: You're trying to evaluate how well AI models understand complex documents, but your benchmark only tests single-page documents. It's like testing someone's ability to read a novel by only showing them the cover page. That's exactly the problem the researchers identified with existing document understanding benchmarks.

Most current benchmarks like DocVQA can only handle single-page documents, and many models easily achieve 95%+ accuracy. Meanwhile, real-world documents are often 50-150 pages long, filled with complex layouts, tables, figures, and cross-references that require understanding.

Enter LongDocURL: A New Approach

LongDocURL (Long Document Understanding, Reasoning, and Locating) is a benchmark that addresses these limitations. It provides a comprehensive test for document AI models.

What Makes It Special?

Scale That Matters:

- 2,325 question-answer pairs (not just 3 samples!)

- 396 unique documents covering over 206,943 pages

- 8 diverse document types: research papers, manuals, books, theses, project proposals, presentations, meeting minutes, and work summaries

- Average 89 pages per document (range: 50-150 pages)

- 20 distinct subtask categories for fine-grained evaluation

Three Core Capabilities:

| Primary Task | Composition Criteria | Subtask Count | Characteristics |

|---|---|---|---|

| Understanding | Single/multi-page, single/cross-element based understanding | 8 | Based on text/layout/table/figure evidence types |

| Reasoning | Numerical calculation, statistical summary focused reasoning (classified by page count) | 8 | Composed based on same range evidence criteria |

| Locating | Cross-element relationship reasoning between different element types | 4 | Classified by element combinations (text+table, figure+layout, etc.) |

The Innovation: Cross-Element Locating

Here's where LongDocURL gets really interesting. Traditional benchmarks focus on single elements (just text, just tables, just figures). But real documents require understanding relationships between elements.

For example, imagine a question like: "Which section title best matches this paragraph?" This requires the model to:

- Understand the paragraph content

- Scan through multiple section titles

- Analyze the semantic relationship

- Make a cross-element connection

This is exactly what LongDocURL tests with its innovative cross-element locating tasks.

Task Examples: Input, Output, and Evidence

| Task Type | Input (Question) | Output (Answer) | Evidence (Source) |

|---|---|---|---|

| Understanding | What section best matches the description: <description>... |

IV.2.1.1. Main categories of scientific purposes |

Page 29–30, Section title + paragraph |

| Reasoning | Which scientific area used the most animals in 2018? (with options A-D) |

A. Animal diseases and disorders |

Page 37 figure, Page 38 table |

| Locating | Where is the figure showing usage located? |

Page 24, Figure 1 |

Page 24 figure location information |

The Technical Magic: How They Built It

A Semi-Automated Pipeline That Actually Works

Building a benchmark of this scale manually would be difficult. The researchers created a four-stage pipeline:

Stage 1: Extract & Filter

- Crawled 200k PDF documents from CommonCrawl

- Filtered for documents with 50-150 pages and English content

- Used GPT-4o to classify document types

- Final selection: 396 documents

Stage 2: QA Generation

- Converted PDFs to "text-type-bbox" triples (text content, element type, bounding box)

- Used multi-step iterative querying with GPT-4o

- Generated questions across all 20 sub-tasks

Stage 3: Automated Verification

- Checked task relevance, format correctness, and faithfulness

- Identified and flagged problematic samples

- Some tasks had up to 75% negative samples initially!

Stage 4: Human Verification

- Human annotators reviewed automated results

- Cross-checked each other's work

- Recovered negative samples where possible

The Smart Classification System

Each question gets classified along multiple dimensions:

- Task Type: Understanding (53.5%), Reasoning (16.6%), Locating (29.9%)

- Evidence Elements: Text (42.8%), Layout (33.5%), Table (23.9%), Figure (37.5%)

- Page Scope: Single-page (47%) vs Multi-page (53%)

- Element Scope: Single-element (62.9%) vs Cross-element (37.1%)

This creates 20 distinct sub-tasks, allowing for granular analysis of model capabilities.

The Data: What's Actually Inside

Question Type Distribution

The dataset includes 9 different question types, with extract being the most common (53.5%):

- extract: 1,243 questions - Direct information extraction

- extract_fig2tab: 231 questions - Figure to table conversion

- topic2title: 201 questions - Topic to title matching

- calculate: 145 questions - Numerical calculations

- summary2title: 137 questions - Summary to title matching

- summary2tab: 126 questions - Summary to table matching

- count: 117 questions - Counting tasks

- compare: 112 questions - Comparison tasks

- summarize: 13 questions - Summarization tasks

Answer Format Distribution

- String: 1,882 answers (40.5%) - Text responses

- List: 1,514 answers (32.6%) - Multiple items

- Integer: 862 answers (18.5%) - Whole numbers

- Float: 370 answers (8.0%) - Decimal numbers

- None: 22 answers (0.5%) - Unanswerable questions

Evidence Sources

- Text: 1,988 (42.8%) - Pure text content

- Table: 1,742 (37.5%) - Tabular data

- Layout: 1,558 (33.5%) - Headers, titles, footers

- Figure: 1,112 (23.9%) - Charts and images

How They Tested: The 3-Stage Evaluation Protocol

| Stage | Process | Input Example | Output Example |

|---|---|---|---|

| 1. Response Generation | Models generate free-form answers | "Which scientific area used the most animals in 2018?" |

"I think the answer is Animal diseases and disorders, based on the chart on page 37." |

| 2. Answer Extraction | GPT-4o extracts concise final answers | "I think the answer is the USA, based on the second paragraph." |

"USA" |

| 3. Score Calculation | Compare with ground truth using generalized accuracy | Extracted answer vs Ground truth | 1 (correct) or 0 (incorrect) |

Answer Type Scoring Rules

| Answer Type | Scoring Rule | Example |

|---|---|---|

| String | Exact or relaxed match | "USA" vs "U.S.A." |

| Integer | Numeric match | 2020 |

| Float | Numeric match with tolerance | 16.8 |

| List | Set or subset match | ["Asia", "Africa"] |

| None | Null/empty response for unanswerable questions | null |

Input Methods: Image vs Text

| Input Method | Description | Performance (GPT-4o) | Pros | Cons |

|---|---|---|---|---|

| Image Input (Cut-off) | 30 continuous pages around answer evidence (LongDocURL default) | 64.4 | Preserves visual structure, tables, figures, layout | Requires more memory, slower inference |

| OCR Text (PyMuPDF) | Plain text extraction | 36.5 | Lightweight, faster | Loses table/chart layout & formatting |

| OCR Text (Docmind) | Markdown-aware OCR | 66.2 | Retains some structure (markdown tables) | Still inferior to full image |

Why Image Input Wins

The structural information loss in OCR text is significant:

- Table formatting disappears

- Figure-text relationships break

- Layout cues are lost

- Cross-element reasoning becomes nearly impossible

The 20 Subtask Categories: A Deep Dive

Understanding Tasks (8 subtasks)

- MP_Text_Understanding: 443 questions - Multi-page text extraction

- SP_Table_Understanding: 263 questions - Single-page table parsing

- SP_Text_Understanding: 259 questions - Single-page text extraction

- MP_Figure_Understanding: 174 questions - Multi-page figure analysis

- MP_Layout_Understanding: 172 questions - Multi-page layout parsing

- MP_Table_Understanding: 115 questions - Multi-page table analysis

- SP_Figure_Understanding: 94 questions - Single-page figure analysis

- SP_Layout_Understanding: 91 questions - Single-page layout parsing

Reasoning Tasks (8 subtasks)

- MP_Text_Reasoning: 115 questions - Multi-page text calculations

- SP_Table_Reasoning: 98 questions - Single-page table calculations

- MP_Figure_Reasoning: 85 questions - Multi-page figure calculations

- MP_Table_Reasoning: 69 questions - Multi-page table calculations

- MP_Layout_Reasoning: 40 questions - Multi-page layout calculations

- SP_Text_Reasoning: 40 questions - Single-page text calculations

- SP_Figure_Reasoning: 28 questions - Single-page figure calculations

- SP_Layout_Reasoning: 12 questions - Single-page layout calculations

Locating Tasks (4 subtasks)

- Figure_Table_Locating: 231 questions - Finding figure-table relationships

- Cross_Title_Locating: 201 questions - Cross-referencing titles

- Para_Title_Locating: 137 questions - Paragraph-title matching

- Cross_Table_Locating: 126 questions - Cross-table relationships

Open-Source Model Reality Check

Context Length Limitations

| Model | Context Length | Max Pages (Image) | Max Pages (Text) | Notes |

|---|---|---|---|---|

| Qwen2.5-VL-7B | 32K tokens | ~8-12 pages | ~16 pages | Best open-source performance |

| LLaVA-1.5-7B | 4K-32K tokens | ~4-8 pages | ~8-16 pages | Variable context length |

| LLaVA-Next-7B | 32K tokens | ~8-12 pages | ~16 pages | Improved version |

| Llama-3-8B | 8K tokens | N/A (text-only) | ~2-3 pages | Text-only model |

Practical Solutions for Open-Source Models

| Solution | Description | Pros | Cons |

|---|---|---|---|

| Chunking | Split 30-page documents into 8-page chunks | Handles long documents within context limits | May lose cross-chunk context |

| Selective Processing | Focus on evidence pages only (e.g., page 54 from 42-71 range) | Efficient, targeted processing | May miss relevant context |

| Hybrid Approach | OCR text + selective image processing | Balanced performance and efficiency | Complex implementation |

| Sliding Window | Process overlapping 8-page windows | Maintains some context continuity | Increased computational cost |

Chunking Strategy Example

For a 30-page document (pages 42-71) with evidence on page 54:

Option 1: Simple Chunking

- Chunk 1: Pages 42-49 (8 pages)

- Chunk 2: Pages 50-57 (8 pages) ← Evidence page 54

- Chunk 3: Pages 58-65 (8 pages)

- Chunk 4: Pages 66-71 (6 pages)

Option 2: Evidence-Centered Chunking

- Focus chunk: Pages 50-57 (8 pages) ← Contains evidence page 54

- Context chunks: Pages 42-49, 58-65 for additional context

The Performance Reality Check

After testing 26 different model configurations, here's what they found:

The Winner (Sort Of):

- GPT-4o scored 64.5 points - the only model to meet the "passing standard"

- But even this top performer has room for improvement

The Open-Source Reality:

- Best open-source model: Qwen2-VL with just 30.6 points

- Most open-source models with <13B parameters scored below 20 points

- That's a 2x performance gap between proprietary and open-source models

The Text vs Image Input Surprise:

- Text-input models performed significantly worse than image-input models

- Top LLM score trailed top LVLM score by about 30 points

- Why? Because converting documents to plain text loses crucial structural information

Where Models Struggle Most

Document Structure Parsing:

- Models scored highest on pure text questions

- Lowest scores on table-related questions

- This highlights a major weakness in document structure understanding

Cross-Element Relationships:

- 37.1% of questions require understanding relationships between different elements

- Models consistently struggle with these cross-element tasks

- This is exactly where LongDocURL exposes current limitations

Multi-Page Reasoning:

- Surprisingly, single-page questions were harder than multi-page questions

- Why? Because multi-page questions often have more context to work with

- But models like GPT-4o actually performed worse on multi-page locating tasks

The Error Analysis: What's Really Going Wrong?

The researchers conducted a detailed error analysis on 97 failed cases from GPT-4o. The results reveal the real bottlenecks:

Perceptual Errors (32.7%):

- The biggest problem: models can't accurately recognize or parse document elements

- Issues with heading hierarchies, figure-text correspondences

- Complex document structures remain a major challenge

Reasoning Errors (16.8%):

- Even when evidence is correctly identified, models fail at calculation and comparison

- Shows that understanding ≠ reasoning

Format Inconsistency (20.6%):

- Models give correct answers but in wrong formats

- Example: "$50.2 million" vs "50212000" - same answer, different format

- Highlights the inflexibility of rule-based evaluation

Other Issues (29.9%):

- Hallucinated evidence, irrelevant answers, incomplete evidence

- Shows models sometimes "make up" information when they can't find it

Key Insights for Model Development

Multimodal Training Matters:

- Qwen2-VL (multimodal) significantly outperformed its text-only variant

- Extensive multimodal training strengthens both comprehension and generation

Human Feedback Helps:

- LLaVA-OneVision-Chat (with DPO and human feedback) outperformed its base model

- Direct Preference Optimization boosts generalization and reasoning

Layout Parsing is Critical:

- Frequent failures due to poor layout analysis and table/chart parsing

- Incorporating layout parsing into training could significantly improve performance

- Multi-stage frameworks (parse structure first, then reason) might be the way forward

Why This Benchmark Changes Everything

The Scale Revolution

- 10x more pages than existing benchmarks

- 2x more samples than MMLongBench-Doc

- 8 diverse document types vs single-type focus

The Complexity Revolution

- 53% multi-page questions (vs single-page focus)

- 37% cross-element questions (vs single-element focus)

- Real reasoning tasks (vs simple extraction)

What This Means for the Future

For Researchers

This benchmark finally provides a realistic testbed for long document understanding. No more inflated performance numbers from easy single-page tasks. This is the real deal.

For Industry

Companies building document AI systems now have a proper benchmark to evaluate their models. The 2x performance gap between proprietary and open-source models is a clear call to action.

For Open Source

The poor performance of open-source models (best score: 30.6 vs GPT-4o's 64.5) shows there's massive room for improvement. This could drive significant investment in open-source document understanding research.

The Bottom Line

LongDocURL provides a comprehensive benchmark that shows current limitations in document understanding, despite progress in large language models.

The fact that GPT-4o scores 64.5 points on this benchmark indicates that document understanding remains challenging, and better benchmarks are needed to measure progress.

Key Takeaways:

- Cross-element relationships are important - 37% of questions require understanding relationships between different document elements

- Structure matters - Text-only models perform 30 points worse than vision-language models

- Open-source models need improvement - The 2x performance gap presents a research opportunity

- Perceptual errors are the biggest bottleneck - 33% of errors come from poor document element recognition

This benchmark provides a comprehensive evaluation framework for document understanding. Models that perform well on LongDocURL are likely to handle real-world document tasks effectively.

The benchmark contributes to advancing document AI research.

References:

- GitHub Repository

- arXiv Paper - "LongDocURL: a Comprehensive Multimodal Long Document Benchmark Integrating Understanding, Reasoning, and Locating"

- ACL 2025 Proceedings - Presented at ACL 2025

- Institutions: Chinese Academy of Sciences, Alibaba Group