Model Context Protocol (MCP): Shaping the Future of AI Agents

The Model Context Protocol (MCP) is an innovative protocol designed to enhance AI model interactions through advanced context management. This blog post explores what MCP is, how it works, and how developers can leverage its capabilities using the Python client example.

What is the Model Context Protocol (MCP)?

The Model Context Protocol (MCP) is a sophisticated protocol that enables more effective communication between applications and AI models by managing contextual information. Unlike standard API calls that might lose context between interactions, MCP provides a structured approach to maintaining conversational history and context, allowing for:

- Persistent context management across multiple AI model interactions

- Efficient handling of conversation history

- Controlled flow of contextual data

- Enhanced AI response quality through better context awareness

- Framework-agnostic implementation for various AI models

MCP acts as a communication layer between applications and AI models, ensuring that relevant context is properly maintained, transmitted, and utilized throughout interactions.

How MCP Works

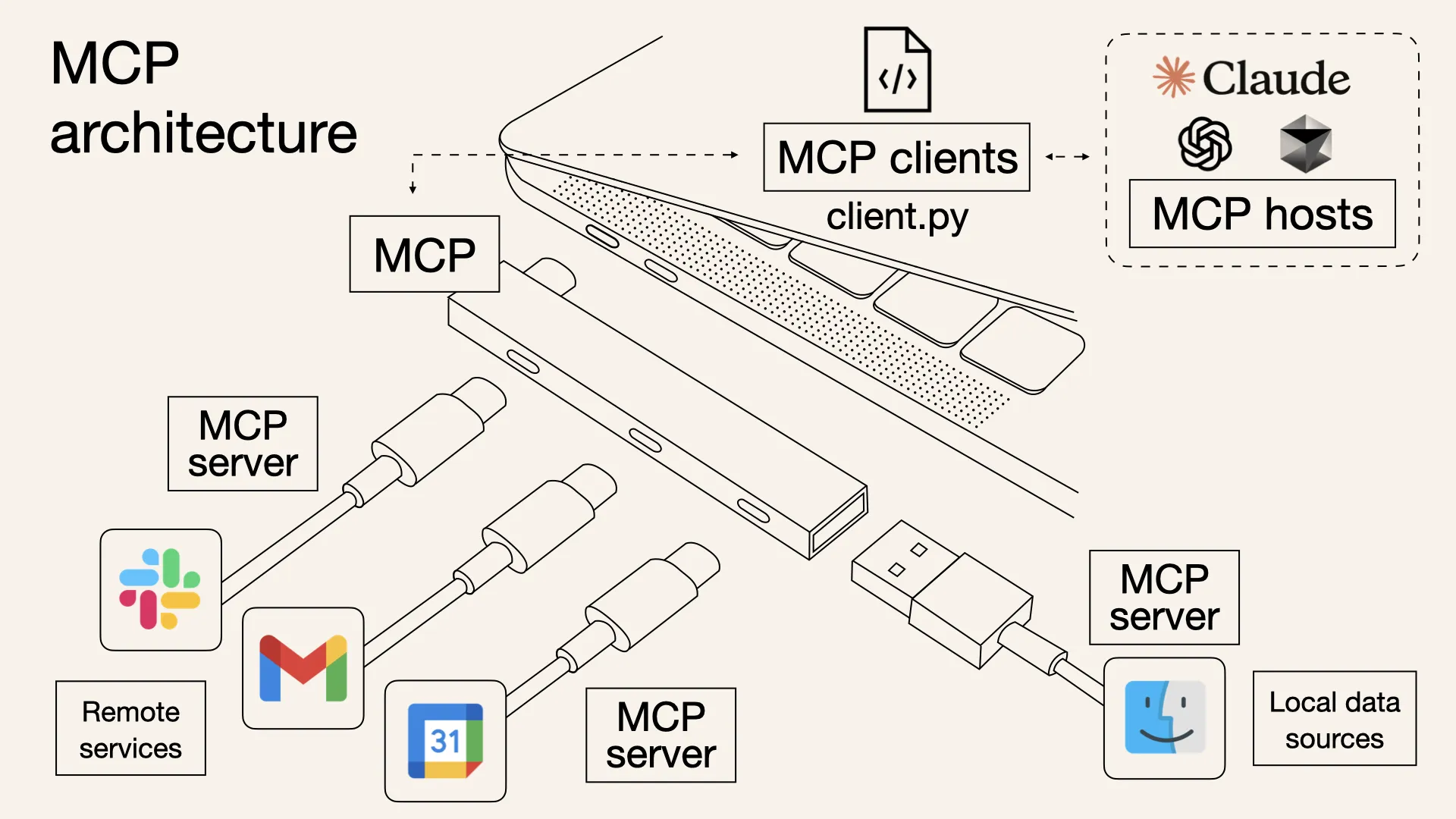

At its core, MCP operates through a client-server architecture where:

- Client Applications: Your interface (e.g., chatbot, web app) that captures user input and displays responses.

- MCP Server: A backend service responsible for:

  - Storing and managing session contexts

  - Handling prompt completion requests

  - Communicating with AI models

- AI Model Layer: The language models or other AI systems that generate responses. These can come from OpenAI, HuggingFace, Anthropic, etc.; MCP doesn’t depend on any specific model.

- Context Lifecycle Management: Contexts are created, updated, and queried throughout the lifecycle of a session.
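The create, update, and query lifecycle described above can be sketched as a small in-memory store. This is an illustration only: `SessionContext`, `ContextStore`, and their method names are hypothetical and do not come from any MCP SDK, and a real server would likely use persistent storage instead of a dictionary.

```python
import uuid
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SessionContext:
    """Conversation state for one session (hypothetical structure)."""
    session_id: str
    messages: list = field(default_factory=list)


class ContextStore:
    """In-memory stand-in for whatever storage a real server would use."""

    def __init__(self) -> None:
        self._sessions = {}

    def create(self) -> str:
        # Create: start a new, empty session and return its id.
        session_id = uuid.uuid4().hex
        self._sessions[session_id] = SessionContext(session_id)
        return session_id

    def update(self, session_id: str, role: str, content: str) -> None:
        # Update: append one turn to the session's history.
        self._sessions[session_id].messages.append(
            {"role": role, "content": content}
        )

    def query(self, session_id: str, last_n: Optional[int] = None) -> list:
        # Query: return the full history, or only the most recent turns.
        messages = self._sessions[session_id].messages
        if last_n is None:
            return list(messages)
        start = max(len(messages) - last_n, 0)
        return messages[start:]
```

A caller would create one session per conversation, call `update` after every turn, and pass the result of `query` along with each new request.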

The protocol handles several key processes:

- Context Management: Tracking and organizing conversation history and contextual information, so that sessions retain memory across turns

- Request Handling: Formatting and sending requests to AI models with appropriate context

- Tool Calling: Models like Claude 3 or GPT-4 can dynamically invoke custom tools.

- Request Routing: User queries are enriched with context and routed through MCP.

- Response Processing: Receiving, processing, and returning model responses to client applications

  - Response Composition: Final output includes model-generated text and optional tool results.

- Context Updates: Updating the stored context based on new interactions

- Connection Management: Maintaining stable connections between clients and the server
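To make the request-handling and tool-calling steps more concrete: on the wire, MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method. The sketch below builds such a request by hand. `build_tool_call` is a hypothetical helper written for this post, and `math_tool` is simply the example tool name used later on; in practice the SDK constructs these messages for you.

```python
import json
from itertools import count

# JSON-RPC requests carry an id so that responses can be matched to them.
_request_ids = count(1)


def build_tool_call(name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking a server to run a tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(request)


print(build_tool_call("math_tool", {"P": 1000, "r": 0.05, "n": 4, "t": 2}))
```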

The Python Client Example

The GitHub repo provides a functional example of how to connect to an MCP server, process queries, and manage conversation context.

Background

Anthropic API

The Anthropic API is a cloud-based service that allows developers to interact with Claude, a family of large language models (LLMs) developed by Anthropic. Similar to OpenAI’s GPT models, Claude is designed to:

- Answer questions

- Generate content

- Interpret instructions

- Perform reasoning tasks

- Call tools via tool-use messages (supported by Claude 3 and later models)

In this Python example, the Anthropic class is used to send structured conversations to Claude 3.5 Sonnet, receive AI responses, and interact with tools based on the conversation context.

Asynchronous Architecture

This project uses asynchronous programming via Python’s asyncio module and async def functions. This style of architecture is especially useful when:

- You are managing multiple I/O-bound tasks (e.g., sending/receiving API calls)

- You want to avoid blocking operations (such as waiting for tool results or Claude’s response)

- You need to scale up concurrent processing

For example, await self.session.call_tool(...) allows the program to pause while waiting for the tool result, without freezing the entire app. This makes the client highly responsive and scalable even with multiple ongoing tasks.
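The benefit is easiest to see with several waits running at once. In this minimal sketch, `asyncio.sleep` stands in for network I/O such as a tool call or a model request; the function names and delays are made up for illustration.

```python
import asyncio
import time


async def fake_io_call(name: str, delay: float) -> str:
    # asyncio.sleep stands in for network I/O such as an API or tool call.
    await asyncio.sleep(delay)
    return f"{name} done"


async def run_concurrently() -> list:
    # gather runs the coroutines concurrently; results keep argument order.
    return await asyncio.gather(
        fake_io_call("tool", 0.2),
        fake_io_call("model", 0.2),
        fake_io_call("log", 0.2),
    )


start = time.perf_counter()
results = asyncio.run(run_concurrently())
elapsed = time.perf_counter() - start
# The three waits overlap, so the total is close to 0.2s rather than 0.6s.
print(results, f"{elapsed:.2f}s")
```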

Graceful Shutdown

In long-running apps like this MCP client, you need to release resources (e.g., subprocesses, open connections) when the app ends. This is called a graceful shutdown.

In the code:

    await self.exit_stack.aclose()

This call closes everything that was registered on the exit stack, in reverse order of registration:

- The MCP session connection

- The communication pipe to the server process

- Any pending tool operations

A graceful shutdown avoids:

- Zombie processes: a process that has completed execution but still has an entry in the process table. In Unix-like operating systems (including Linux), when a process finishes, it doesn’t immediately disappear — it leaves behind some information (its exit status) so that its parent process can read it. If the parent never “cleans up” by calling wait() on the finished process, the child process becomes a zombie.

- Memory leaks

- Broken connections

It’s good practice in any production-grade async app.
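How `AsyncExitStack` handles this can be seen in a small, self-contained sketch. The `resource` context manager below is a stand-in for real resources such as the stdio transport or the session; it only records when it is opened and closed.

```python
import asyncio
from contextlib import AsyncExitStack, asynccontextmanager

events = []


@asynccontextmanager
async def resource(name: str):
    # Stand-in for a real resource such as a transport or a session.
    events.append(f"open {name}")
    try:
        yield name
    finally:
        events.append(f"close {name}")


async def demo() -> None:
    stack = AsyncExitStack()
    try:
        await stack.enter_async_context(resource("transport"))
        await stack.enter_async_context(resource("session"))
        events.append("working")
    finally:
        # Runs even if the work above raised; closes in reverse order.
        await stack.aclose()


asyncio.run(demo())
print(events)
```

The session is closed before the transport it depends on, which is the order you want when one resource is layered on top of another.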

A production-grade asynchronous application refers to a software system that:

- Uses asynchronous programming (like async/await, asyncio, or other non-blocking frameworks),

- Is built to run reliably and efficiently in production environments, not just for testing or demos.

Features

- Claude 3.5 Sonnet integration via the Anthropic API

- Custom tool execution via MCP server

- Session-aware query handling

- Asynchronous architecture with graceful shutdown

Imports and Initialization

    import asyncio
    from typing import Optional
    from contextlib import AsyncExitStack
    from datetime import datetime

    from mcp import ClientSession, StdioServerParameters
    from mcp.client.stdio import stdio_client

    from anthropic import Anthropic
    from dotenv import load_dotenv

- Loads environment variables (.env) for API keys.

- Uses AsyncExitStack for managing resources.

- Integrates the Claude API via Anthropic.

Class: MCPClient

    class MCPClient:
        def __init__(self):
            self.session = None
            self.exit_stack = AsyncExitStack()
            self.anthropic = Anthropic()

- Holds the Claude client and session with the MCP server.

connect_to_server()

    async def connect_to_server(self, server_script_path: str):
        ...

- Accepts a .py or .js path to run the MCP server locally.

- Establishes a stdio_client transport and wraps it in a ClientSession.

- Lists available tools (Python functions) exposed by the server.

Example:

python client.py ./server.py
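The first step, deciding how to launch the server script, can be sketched without any MCP dependency. `server_command` is a hypothetical helper, and the choice of `python` and `node` as interpreter names is an assumption about the local environment.

```python
from pathlib import Path


def server_command(server_script_path: str) -> tuple:
    """Pick the interpreter for a server script based on its extension."""
    suffix = Path(server_script_path).suffix
    if suffix == ".py":
        command = "python"
    elif suffix == ".js":
        command = "node"
    else:
        raise ValueError("Server script must be a .py or .js file")
    # These two values are what StdioServerParameters(command=..., args=...)
    # would receive before stdio_client launches the server subprocess.
    return command, [server_script_path]


print(server_command("./server.py"))
```

Rejecting unknown extensions early gives a clearer error than letting the subprocess launch fail later.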

process_query()

    async def process_query(self, query: str) -> str:
        ...

Main logic:

- Sends the initial user query to Claude using messages.create().

- If Claude tries to call a tool:

- Executes it using session.call_tool()

- Sends the tool result back to Claude

- Gets the next reply based on that result

- Saves tool output to a file like tool_result_2025-05-16 13:00:00.txt

💡 This loop continues if multiple tools are called.
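The control flow of that loop can be sketched with stand-ins so that it runs without an API key or a server. Everything here is hypothetical: `FakeSession` and `fake_model` replace the MCP session and the Claude call, and the message shapes are deliberately simplified and do not match the Anthropic API format. In the real client the two marked calls would be `self.anthropic.messages.create()` and `self.session.call_tool()`.

```python
import asyncio


class FakeSession:
    """Stand-in for an MCP session; only call_tool is modelled."""

    async def call_tool(self, name: str, args: dict) -> str:
        if name == "add":
            return str(args["a"] + args["b"])
        raise ValueError(f"unknown tool: {name}")


def fake_model(messages: list) -> list:
    """Scripted stand-in for the model: asks for one tool, then answers."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return [{"type": "tool_use", "name": "add", "input": {"a": 2, "b": 3}}]
    return [{"type": "text", "text": f"The sum is {tool_results[-1]['content']}."}]


async def process_query(query: str, session, model, max_rounds: int = 5) -> str:
    messages = [{"role": "user", "content": query}]
    output = []
    for _ in range(max_rounds):  # guard against endless tool requests
        blocks = model(messages)  # real client: messages.create(...)
        output.extend(b["text"] for b in blocks if b["type"] == "text")
        tool_calls = [b for b in blocks if b["type"] == "tool_use"]
        if not tool_calls:
            break  # plain text reply: the turn is finished
        for call in tool_calls:
            # real client: await self.session.call_tool(...)
            result = await session.call_tool(call["name"], call["input"])
            output.append(f"[Calling tool {call['name']} with args {call['input']}]")
            # Feed the result back so the next model call can use it.
            messages.append({"role": "tool", "content": result})
    return "\n".join(output)


answer = asyncio.run(process_query("What is 2 + 3?", FakeSession(), fake_model))
print(answer)
```

The `max_rounds` cap is a design choice added for this sketch; it keeps a misbehaving model from requesting tools forever.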

chat_loop()

    async def chat_loop(self):
        ...

- Accepts queries from the user and prints model responses

- Terminates on "quit"

- Interactive CLI loop

- Command-Line Interface (CLI): a text-based interface that lets users interact with a program by typing commands in a terminal or shell (like Bash, zsh, or Windows Command Prompt), instead of using graphical elements like buttons or menus. It looks like:

> What is the capital of France?

Paris

> How many moons does Jupiter have?

79

> quit
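A loop like this can be sketched as follows. This is not the repository's implementation: the `answer`, `read_line`, and `write_line` parameters are assumptions introduced here so the loop can be exercised without a terminal or a model.

```python
import asyncio
from typing import Awaitable, Callable


async def chat_loop(
    answer: Callable[[str], Awaitable[str]],
    read_line: Callable[[str], str] = input,
    write_line: Callable[[str], None] = print,
) -> None:
    """Read queries until the user types 'quit'."""
    while True:
        query = read_line("> ").strip()
        if query.lower() == "quit":
            break
        if not query:
            continue  # ignore empty lines
        try:
            write_line(await answer(query))
        except Exception as exc:
            # Keep the loop alive if a single query fails.
            write_line(f"Error: {exc}")


async def shout(query: str) -> str:
    # Placeholder for the call that would send the query to the model.
    return query.upper()


scripted = iter(["hello", "", "quit"])
printed = []
asyncio.run(chat_loop(shout, lambda prompt: next(scripted), printed.append))
print(printed)
```

Note that the built-in `input` blocks the event loop while it waits, which is acceptable for a single-user CLI but not for a server handling many sessions.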

cleanup()

    async def cleanup(self):
        await self.exit_stack.aclose()

- Ensures the session and subprocesses are closed cleanly.

main()

    async def main():
        ...

- Parses the CLI argument for the server script path.

- Creates an MCPClient, connects to the server, and starts the loop.

To run:

python client.py ./server.py
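The overall shape of the entry point can be sketched as below. `parse_server_path` and `run` are hypothetical names; the only assumption about the client is that it exposes the `connect_to_server`, `chat_loop`, and `cleanup` methods described in this post.

```python
import asyncio


def parse_server_path(argv: list) -> str:
    """Return the server script path, or exit with a usage message."""
    if len(argv) < 2:
        raise SystemExit("Usage: python client.py <path_to_server_script>")
    return argv[1]


async def run(client, argv: list) -> None:
    """Connect, chat, and always clean up, even if an error occurs."""
    server_path = parse_server_path(argv)
    try:
        await client.connect_to_server(server_path)
        await client.chat_loop()
    finally:
        await client.cleanup()


# In the real script this would be roughly:
#     asyncio.run(run(MCPClient(), sys.argv))
```

Putting `cleanup()` in a `finally` block means the server subprocess is released whether the chat loop ends normally or with an exception.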

Example Interaction

User:

How do I calculate compound interest?

Claude:

To calculate compound interest, you can use the formula: A = P(1 + r/n)^(nt)

[Calling tool 'math_tool' with args {P: 1000, r: 0.05, n: 4, t: 2}]

Tool Result:

Final amount after 2 years is $1,104.49

Claude Final:

Using the tool result, your investment grows to $1,104.49 after 2 years, which is $104.49 in compound interest.
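The formula itself is easy to check directly. The function below is a minimal sketch of what a tool such as `math_tool` might compute; the tool's actual implementation is not shown in the source, so the function name and signature are assumptions.

```python
def compound_amount(principal: float, rate: float,
                    periods_per_year: int, years: float) -> float:
    """Final amount A = P(1 + r/n)^(nt)."""
    return principal * (1 + rate / periods_per_year) ** (periods_per_year * years)


# Same arguments as the tool call above: P=1000, r=0.05, n=4, t=2.
amount = compound_amount(1000, 0.05, 4, 2)
print(f"${amount:,.2f}")  # prints $1,104.49
```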

MCP Architecture Recap

    [ User ]
        ↓
    [ MCP Client ]
        ↓
    [ MCP Server ]
        ├──→ [ Claude API ] (LLM-based response generation)
        └──→ [ Tools ] (e.g., calculator, search, code interpreter)

| Component | Role |
|---|---|
| User | Inputs a query |
| MCP Client | Sends the query to the server |
| MCP Server | Dispatches the request to the appropriate backend |
| Claude API | Large Language Model for answering natural language queries |
| Tools | Specialized modules (e.g., calculator, web search, code execution) |

Practical Applications of MCP

MCP enables numerous applications that benefit from improved context management:

Conversational AI Systems

- Chatbots with better memory of previous exchanges

- Virtual assistants that maintain context across sessions

- Customer service solutions with coherent conversation flows

- Multi-turn dialog systems with improved contextual awareness

Content Generation

- Long-form writing assistants that maintain thematic consistency

- Code generation tools with awareness of project context

- Content creation platforms with persistent style and tone

- Multi-stage creative workflows with context retention

Enterprise Solutions

- Knowledge management systems with contextual query capabilities

- Data analysis tools with persistent analysis context

- Business intelligence applications with conversation memory

- Internal documentation systems with contextual search

Development Tools

- IDE integrations that understand code context

- Documentation generators with project awareness

- Debugging assistants with execution context

- Test generation tools with system understanding

Getting Started with MCP

To begin working with MCP, you'll need:

- Access to an MCP server or setup instructions for deploying one

- API credentials for authentication

- A client library such as the Python example

- Basic understanding of context management in AI interactions

The Python client example repository provides a solid starting point, with documentation and code examples demonstrating the core functionality.

The Architecture Behind MCP

As shown in the YouTube video demonstration by Alejandro AO, the MCP architecture typically consists of:

- Client Libraries

  - Client SDK (e.g., Python client)

  - Language-specific implementations to interact with the MCP server

- API Gateway

  - MCP Gateway/API Layer

  - Managing requests and authentication

- Context Service

  - Context Store (e.g., Redis, MongoDB)

  - Storing and retrieving conversation contexts

- Model Interface

  - Model Inference Server (OpenAI, HuggingFace, etc.)

  - Connecting to various AI models

- Persistence Layer

  - Logging and Observability Tools

  - Storing conversations and contexts for future reference

This architecture allows MCP to provide a unified interface for context management across different AI models and applications.

Best Practices for MCP Development

When working with MCP, consider these best practices:

- Structure conversations effectively: Organize interactions to maximize context utility

- Manage context size: Be mindful of token limits and context windows

- Implement proper error handling: Prepare for connection issues or model errors

- Consider context persistence: Determine appropriate lifetimes for different types of contexts

- Secure sensitive information: Be careful about what information is included in contexts
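As one illustration of managing context size, the sketch below keeps only the most recent messages that fit a budget. It is an assumption-laden simplification: `trim_history` is a hypothetical helper, and it counts characters as a crude proxy for tokens, whereas a real application would use the model's tokenizer or the token counts reported by the API.

```python
def trim_history(messages: list, max_chars: int) -> list:
    """Keep the most recent messages whose combined length fits the budget."""
    kept = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        size = len(message["content"])
        if used + size > max_chars:
            break
        kept.append(message)
        used += size
    kept.reverse()  # restore chronological order
    return kept


history = [
    {"role": "user", "content": "aaaa"},
    {"role": "assistant", "content": "bbb"},
    {"role": "user", "content": "cc"},
]
print(trim_history(history, max_chars=5))
```

Dropping the oldest turns first is only one possible policy; summarizing older turns is a common alternative when early context still matters.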

Conclusion

The Model Context Protocol represents a significant advancement in how applications interact with AI models. By providing structured context management, MCP addresses one of the key limitations of traditional API approaches to AI model integration.

The Python client example serves as an excellent introduction to MCP development, demonstrating the key concepts and providing a foundation that developers can build upon for their own applications. Whether you're developing conversational systems, content generation tools, or enterprise solutions, MCP provides the infrastructure needed to maintain consistent, context-aware interactions with AI models.

As AI systems continue to evolve and become more integrated into applications, technologies like MCP will play an increasingly important role in ensuring that these interactions are coherent, contextually appropriate, and efficient.

Happy coding!