Activation Functions with NumPy

Activation Function

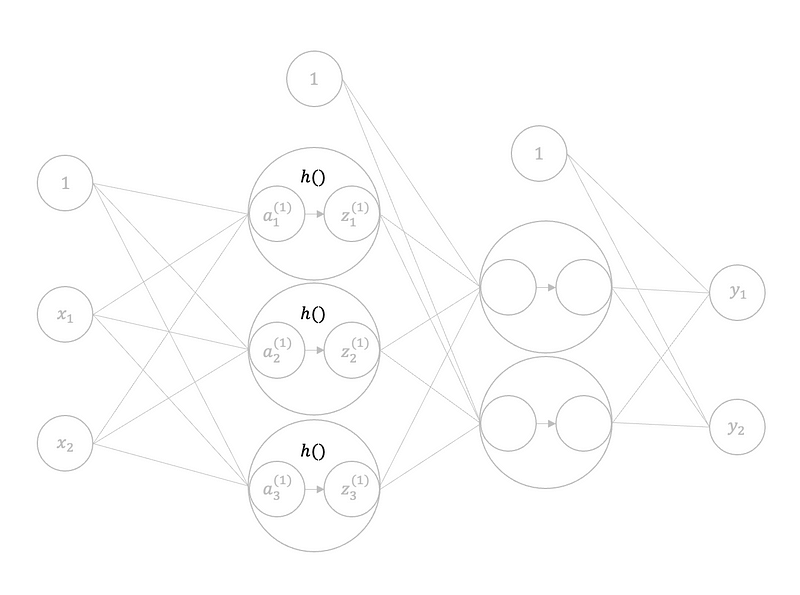

An activation function is a mathematical “gate” between the input feeding the current neuron and its output going to the next layer. It defines the output of a node given an input or set of inputs, and so decides whether, and how strongly, the neuron is activated. Because the activation functions covered here are nonlinear, they let the network learn complex patterns that a stack of purely linear layers could not represent. We will look at three activation functions: the step function, the sigmoid function, and the ReLU function.

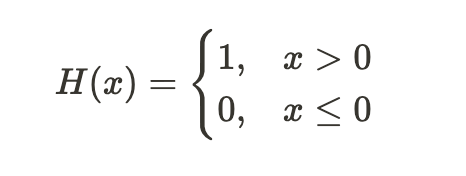

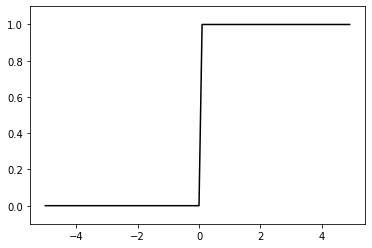

Step Function

A step function (Heaviside step function) produces a binary output, which is why it is also called the binary step function. It outputs 1 (true) when the input exceeds a threshold and 0 (false) when it does not.

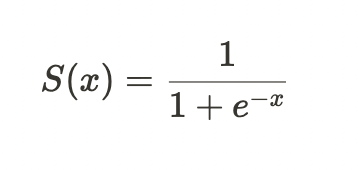

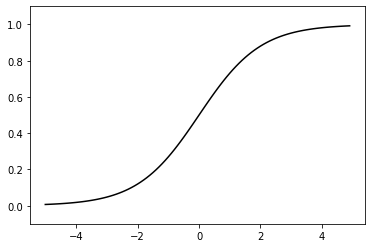
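To make the step function concrete, here is a minimal NumPy sketch. The function name `step_function` and the `threshold` parameter (defaulting to 0) are my own choices for illustration. I also assume the output at exactly the threshold is 0; some definitions of the Heaviside function use 0.5 or 1 there instead.

```python
import numpy as np

def step_function(x, threshold=0.0):
    """Element-wise binary step: 1 where x > threshold, else 0."""
    x = np.asarray(x)
    # The comparison yields booleans; cast them to integers 0/1.
    return (x > threshold).astype(int)

print(step_function(np.array([-1.0, 0.5, 2.0])))  # [0 1 1]
```

Because the comparison is applied element-wise, the same function works on a single number or on a whole layer of pre-activations at once.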

Sigmoid Function

A sigmoid function has a characteristic S-shaped curve, also called a sigmoid curve. The most common example is the logistic function, y = 1 / (1 + e^(-x)), which is what “sigmoid” usually means in neural networks. At x = 0, y equals 0.5; as x becomes a large positive number, y approaches 1, and as x becomes a large negative number, y approaches 0.

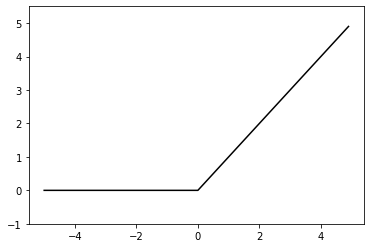
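The logistic sigmoid translates almost directly into NumPy. This is a minimal sketch; the name `sigmoid` is my own choice, and the plain formula is used as-is, so for very large negative inputs `np.exp` may emit an overflow warning even though the result still comes out as 0.

```python
import numpy as np

def sigmoid(x):
    """Logistic sigmoid, applied element-wise: 1 / (1 + e^(-x))."""
    x = np.asarray(x, dtype=float)
    return 1.0 / (1.0 + np.exp(-x))

# Values at a large negative input, zero, and a large positive input.
print(sigmoid(np.array([-10.0, 0.0, 10.0])))
```

The middle value is exactly 0.5, while the outer two sit very close to 0 and 1, matching the behaviour described above.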

ReLU Function

ReLU (rectified linear unit, also called the rectified linear activation function) is a piecewise linear function that outputs the input directly if it is positive and zero otherwise. Because it outputs zero for every negative input, only some of the neurons are active for a given input, which makes the activations sparse and the function cheap to compute. This is one reason ReLU is among the most widely used activation functions.
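In NumPy, ReLU is a one-liner built on `np.maximum`, which compares each element against 0 and keeps the larger value. The function name `relu` is just my choice for this sketch.

```python
import numpy as np

def relu(x):
    """Rectified linear unit: keep positive values, replace the rest with 0."""
    return np.maximum(0, x)

print(relu(np.array([-2.0, 0.0, 3.0])))  # [0. 0. 3.]
```

Note that `np.maximum` (element-wise) is different from `np.max` (which reduces an array to its largest value), so it is worth double-checking which one you typed.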

Application

Let’s apply these functions to a simple network.
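Here is a minimal sketch of what that could look like. Everything about the network is an assumption made for illustration: two inputs, one hidden layer of three neurons, one output neuron, and hand-picked (hypothetical) weights and biases rather than trained ones. The hidden layer uses whichever activation we pass in, and the output layer always uses the sigmoid.

```python
import numpy as np

def step_function(x):
    return (x > 0).astype(int)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def relu(x):
    return np.maximum(0, x)

# Hypothetical, hand-picked parameters (not trained values).
W1 = np.array([[0.5, -1.0, 0.2],
               [-0.4, 0.6, 0.8]])   # shape (2 inputs, 3 hidden neurons)
b1 = np.array([0.1, 0.0, -0.5])
W2 = np.array([[0.3], [-0.2], [0.7]])  # shape (3 hidden neurons, 1 output)
b2 = np.array([0.1])

def forward(x, hidden_activation):
    """One forward pass: input -> hidden layer -> sigmoid output."""
    z1 = x @ W1 + b1                 # weighted sum for the hidden layer
    h = hidden_activation(z1)        # apply the chosen activation
    z2 = h @ W2 + b2                 # weighted sum for the output layer
    return h, sigmoid(z2)

x = np.array([1.0, 0.5])
for name, fn in [("step", step_function), ("sigmoid", sigmoid), ("relu", relu)]:
    hidden, output = forward(x, fn)
    print(name, "hidden:", hidden, "output:", output)
```

For this input the hidden pre-activations come out to roughly 0.4, -0.7 and 0.1, so the step function turns the middle neuron off completely, ReLU zeroes it while passing the other two through unchanged, and the sigmoid squashes all three into the range between 0 and 1.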

I hope this article gave you a good idea of how activation functions work :)